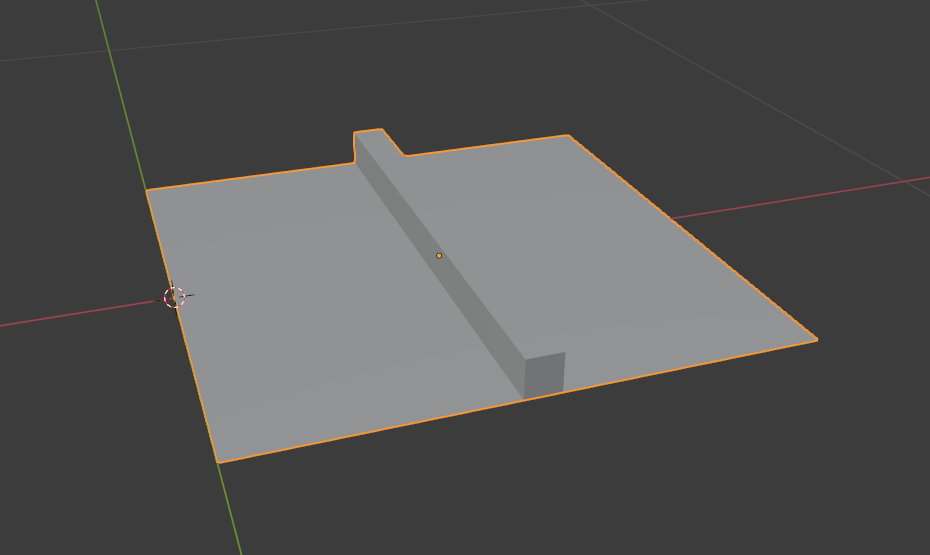

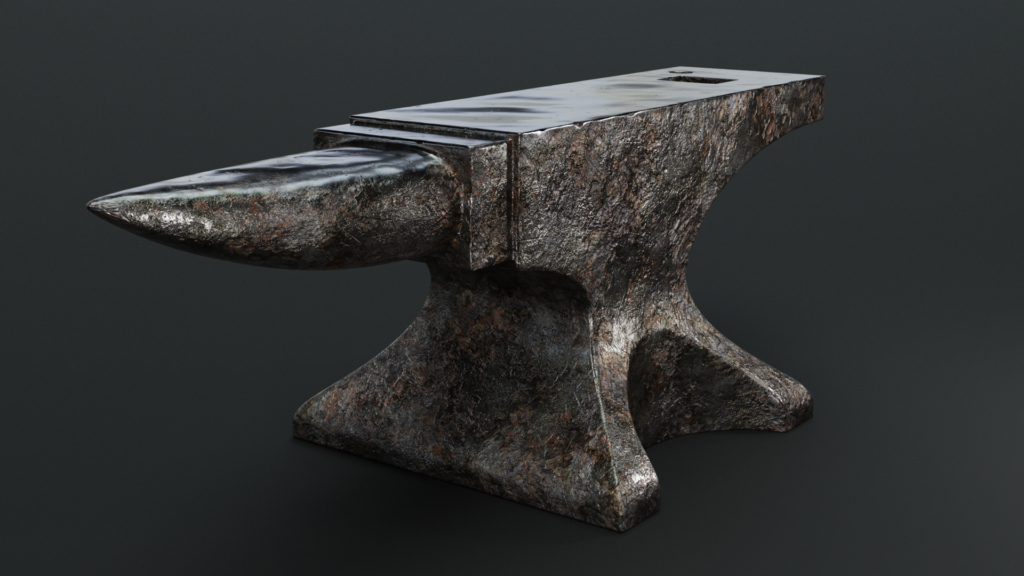

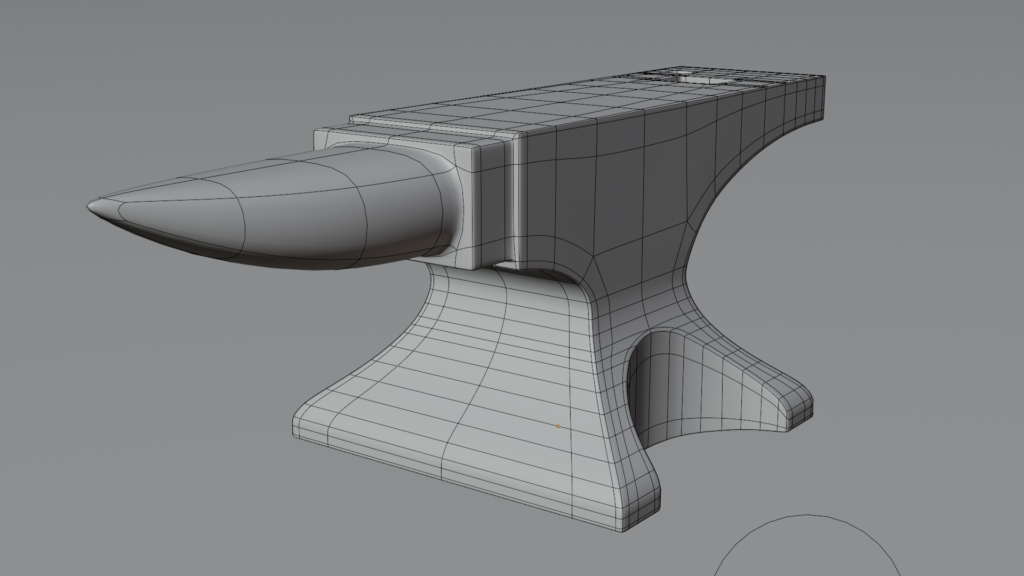

I have written about non-photorealistic (NPR) rendering in Blender before, but those techniques typically rely on hacks in the node editor and still need a lot of attention to look passable.

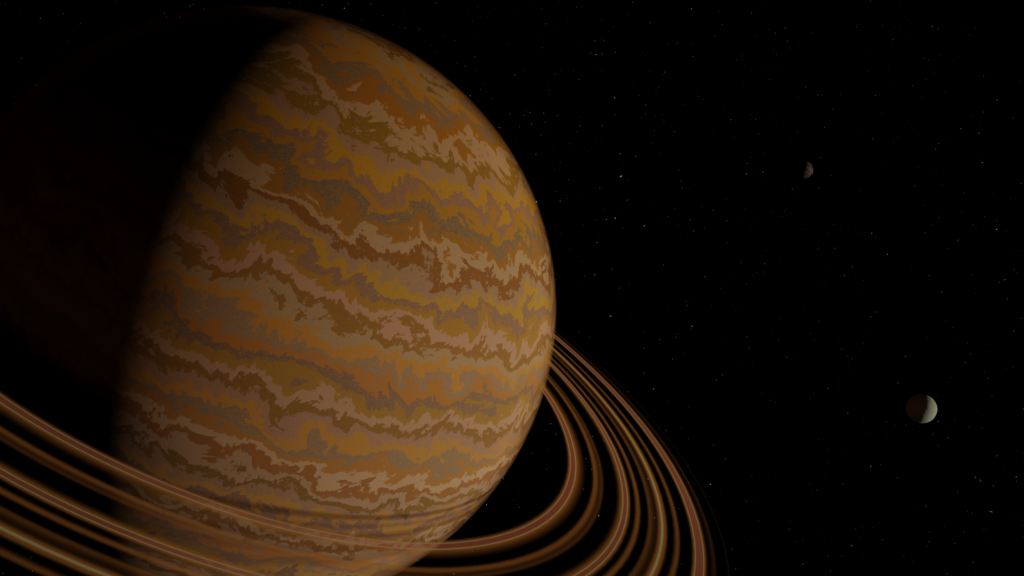

But I learned of an independent animation studio that uses Blender to create anime-inspired shorts: DillonGoo Studios. Even more interesting, the studio maintains its own custom build of Blender, GooEngine, and uses it to produce those shorts.

GooEngine adds new shader nodes to Blender that greatly improve its NPR abilities. For a full overview of the nodes and examples of how they are used, DillonGoo has an excellent introduction video. The first few minutes of the video also show the problems with Blender's built-in “Toon Shader” node.

Most exciting of all, the custom features of GooEngine are now slated to be added to the main Blender source code! The DillonGoo Studios developers are now official code contributors to Blender, and have already contributed valuable code to v4.3 (light-linking, something Blender has always lacked).

Take a look at the official announcement (video below) and some of DillonGoo’s anime-style shorts! Or if you’re feeling adventurous, download and try out the prototype build of Blender!